Generative AI Builds Bridges Between Creative Mediums

On architecting a new Internet paradigm for rapid content creation and sharing.

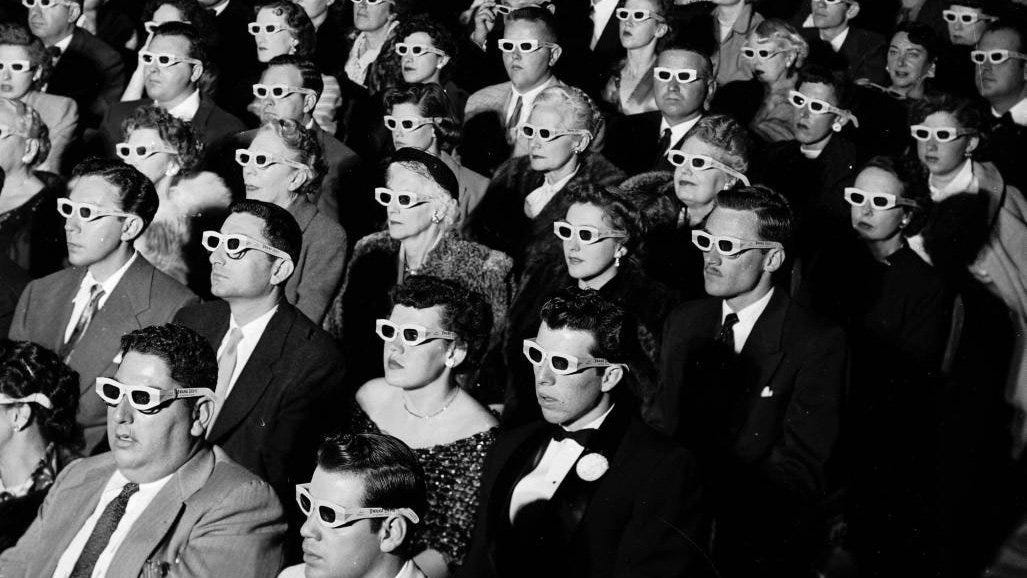

Technically the first 3D feature was The Power of Love (1922), which arrived a full 30 years before the golden age from 1952 to 1954. A silent film, it premiered in Los Angeles and offered viewers two alternate endings depending on whether they wanted a happy or sad finale. To achieve this, the audience would look through either the green or the red lens of the 3D viewing glasses. The movie was not a success, with both the 3D and 2D versions of the feature lost during attempts at distribution.

The last few months have been an absolute joyride for those interested in the AI space. The throughput of high-quality research paired with shipped products is surreal. Just take a look at Ben’s Bites to see the rapid progress this industry has made.

As the saying goes: software is eating the world, and AI is eating software.

On the text-to-text front, we have large language models (LLMs) that create output indistinguishable from real personalities or fictional characters (Character.AI, Circle Labs). Do we have software that can pass the Turing test? Answer: sometimes (depends on your definition of the Turing test). Writer’s block might be a thing of the past as tools like Lex allow an author to autogenerate many possible paths a half-baked article might take.

We can also convert text into image with zero technical know-how, thanks to services like DALL-E, Stable Diffusion, and Midjourney. These tools are being embedded into the workflow of artists. My favorite example of this is in game design. You can use Stable Diffusion and DreamBooth (two generative AI models) to generate art for potions (above), characters, or landscapes. Image-to-text has also been conquered with CLIP and CLIP Interrogator, which is phenomenal for deriving prompts from source images that can then be fed into a text-to-image model.

Microsoft, Adobe, and Canva have all begun to build features that incorporate generative AI into their dashboards.

Speech-to-text is now a solved problem with OpenAI’s Whisper. We can even go from text to a video or 3D model (with shaders)!

Bridges Between Creative Mediums

The overarching theme here is that AI can be used as a multi-modal bridge. As a species, we’re interested in converting one medium to another. In our society we have human roles for these bridges. Scribes are hired to write down text associated with an audio source. Narrators orate a script, thus performing text to speech. Digital designers craft an idea (often first laid out in words in some kind of spec) to image. 3D designers do the same but for 3D renderings.

AI can do it all, bolstering the toolkit of those in the careers mentioned above by providing a rough first draft for certain tasks. Text-to-speech, speech-to-video, text-to-audio, image-to-3d object, and so it goes.

When the input and output medium are the same (e.g. text-to-text, image-to-image), the bridge metaphor seems to break down. However, this isn’t the case. I argue generative AI will serve as an indispensable tool for creative exploration, bridging a creator’s existing body of work with ideas just beyond their grasp.

The well of inspiration creatives can draw from is now the union of their past experiences and the latent space of these generative models.

A human still needs to shepherd generated ideas into a more cohesive and personalized structure. In these cases, generative AI connects what a creator knows about a topic with ideas they should/could know that might not be immediately remembered or previously learned.

Through careful prompt engineering, LLMs can rewrite content for new audiences. For example, GPT-3 can summarize a piece of writing for a second grader. Well designed prompts allow a creator to guide LLMs to shape or reframe text with specific intent. The prompt engineer doesn’t know what the output of an LLM for a given input will be. However, with the right prompt, they can provide momentum in the right direction and provide an intuition for how the output should differ from the input.

Hence, models with the same type of input and output are still a bridge between two modes: the known and unknown.

Text-to-code is a fascinating example of this as well. In the future, I strongly believe that most coders will spend very, very little time learning new languages. With tools like Github Copilot or Replit’s Ghostwriter, they can easily grok the syntax and methodologies associated with a new language. Programmers can focus on designing complex systems, developing conceptual understandings, and correcting logic instead of wasting time on the specificities or lexical oddities of new languages. Text-to-code models allow programmers to bridge over their existing knowledge about computers to new interfaces/languages.

Transformers, the neural network architectural innovation that has driven this Cambrian explosion in generative AI, are quite literally transforming how we can view and digest content. These models have proven themselves to be akin to a Babel fish for the digital age.

Creators can create content in one medium they are comfortable working in, and consumers can view it in another. I’m excited by this notion. Imagine that in the future we might be able to turn blog posts like this into a YouTube video with little to no effort on my end. I could drive multichannel distribution with a single piece of content.

This diagram shows a mapping of different modes (text, image, etc.) and models that convert between them. Even if there isn’t a model going from X → Y, you can often find an intermediary mode to facilitate a conversion X → A → Y. This intermediary mode is nearly always text. Note how many arrows go either into text or out of text. Text is the universal interface.

A Force Multiplier on Creative Work

Web 1.0 allowed us to read content at a global scale (read). Web 2.0 was designed to be a dynamic, interactive system, where anyone could post their own content (read+write). Generative AI enables anyone to create their own content in one domain, and recreate it over and over again for different audiences and distribution channels (read+write+generate). I think this could be called either the Generative Web, or the Personalized Web (since Web 3.0 is a semantically overloaded term).

Whether this involves a simple translation task or a more complex AI-driven modality conversion depends on the creator. There are many new choices popping up that have never been seen before.

The amount of leverage derivable from one article, picture, podcast, video, or tweet will explode in the coming years. Content has become interoperable between modes and can be consumed in novel ways. We’re moving towards a modality agnostic future.

Thanks to David, Michelle, and Bryan for reading early drafts and providing valuable feedback.