How has AI changed the way we work, collaborate, and live?

I’d argue that most people have yet to be impacted by AI in any way whatsoever.

The promise of an AI-augmented future, if it is ever coming, isn’t here yet.

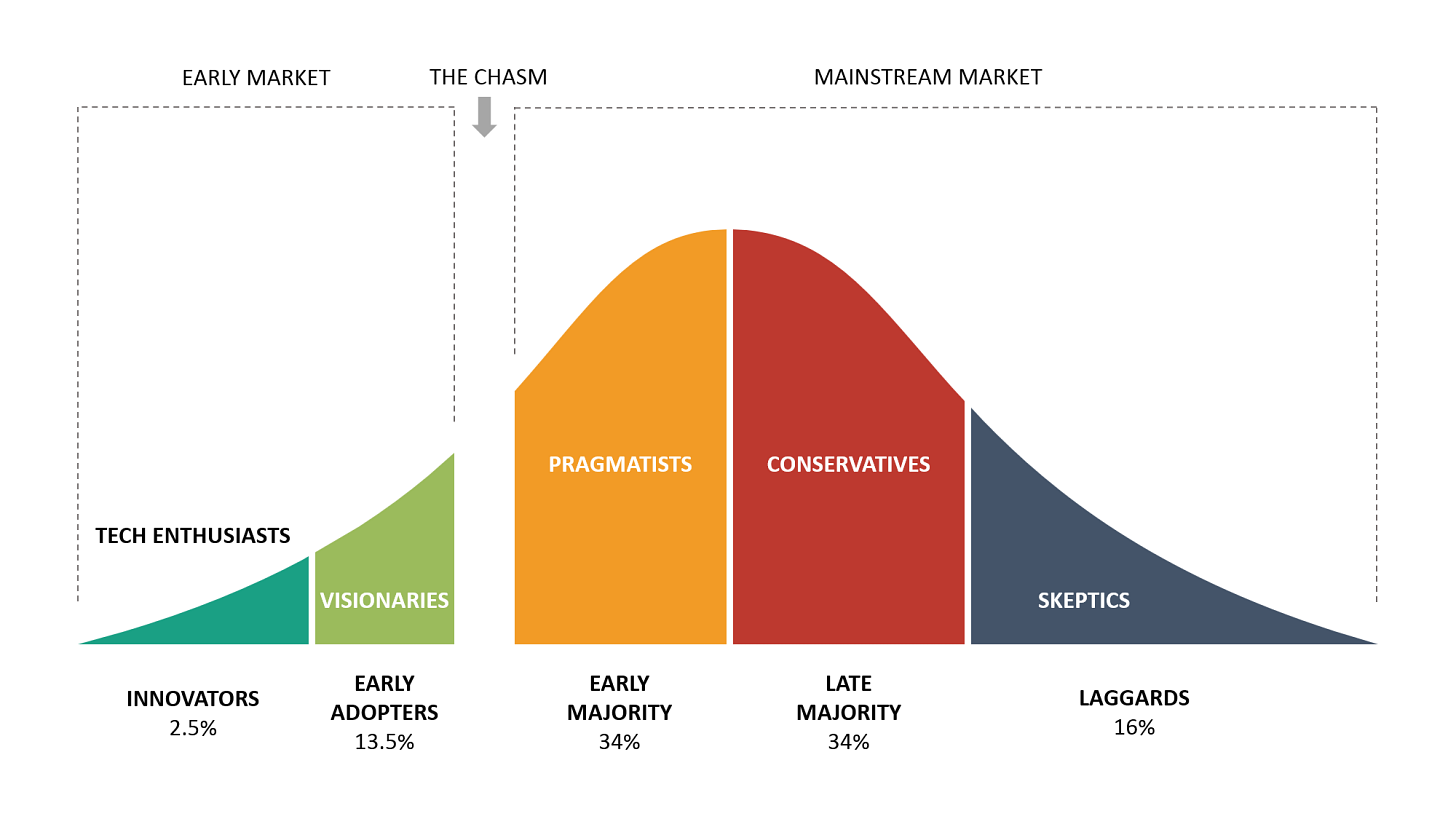

I’d consider my close friends and me to be far in the left tail of the distribution above: eager to try new tools and adopt them into our day-to-day routines.

We only use a few AI tools on a daily basis:

ChatGPT. Mostly for code generation and basic Q&A.

Copilot. The UX for Copilot is perfect. It stays out of your way until you need it. This means your efficiency is, at worst, as bad as it was pre-Copilot.[1]

I also use the following, but much less frequently.

I find Claude to be better than ChatGPT at summarization and explanations. It’s more “human” in its responses.

I’ve been reaching for Perplexity more often in the last few weeks. It feels less like a Google replacement and more like a better Quora.[2] Unfortunately, I don’t find it very sticky as a product.

Cursor is awesome. I’ve been using it more than vanilla VS Code, but I don’t use its built-in AI tooling often. When I do, though, it’s magical.

Depending on the task, AI tools save me 5-25% of my time. This is a huge deal.

I spend more time on the creative parts of my work and less time on repetitive or mundane tasks. I can quickly generate boilerplate code, summarize long pages of text, and compose polished emails.

It’s shocking to me that most people aren’t having the same experience.

AI products haven’t proliferated into the mainstream. I don’t think most engineers, much less those in other disciplines, are using these models to their advantage.

I’ve shown my parents ChatGPT, and they’re both in careers where it could be super helpful.[3] It hasn’t been sticky for either of them, despite the initial “wow” factor.

Moreover, there are basically no new AI products that I use apart from those I mentioned above. These products (ChatGPT and Copilot, specifically) were the ones that kicked off this golden age of generative AI. Why haven’t people latched onto anything since?

Where are the AI apps?

Life doesn’t feel that different compared to a year ago, when I was first incorporating LLMs into my life.

Why don’t I feel like Tony Stark or John Anderton yet?[4]

There are three possible paths forward:

Hypothesis 1: the AI promise will be delivered this year.

Hypothesis 2: AI is currently in a nurture phase. We’ll see game-changing products within the next 5-10 years.

Hypothesis 3: it’s never coming. We’ve bought into a fugazi.

Let’s dissect each of these points in more detail.

Hypothesis 1: The Promised Land Is Near

Most AI startup founders today hope this is the answer.

The world’s brightest technical and product minds are jumping ship from their previous jobs to start new companies in generative AI. Flashy demos that could only have been dreamed of a year ago are key to raising exorbitant amounts of capital.

Venture firms have hitched themselves to various horses — agent orchestration infra, code generation apps, database chatbots, etc. — based more on hype than proven utility or defensibility. Sky-high valuations assume successful applications will naturally emerge once research translates to production systems.

There are more companies than I can count that are building in stealth with private betas. AI startups are in a really weird state right now where everyone has their own skunkworks operation underway.

A cynical (but perhaps more realistic) perspective is that these startups actually have no product yet. I have a strong suspicion that while GPT-4 is good, it’s not quite good enough for many use cases.

The current era of AI companies is effectively a land grab while waiting for OpenAI to launch GPT-5. Everyone is hoping GPT-5 is a massive step change in model capabilities.

But this line of reasoning is questionable. GPT-3.5 to GPT-4 already represented a major advance, yet few transformative products followed.

There's little reason to believe the jump to GPT-5 will be radically different. Progress in capabilities doesn't automatically yield commercial viability. The disconnect between lab and market still feels vast.

We also have no idea what research labs are up to. The GPT-4 and Mistral papers shed little light on how the models work, which makes it hard for outsiders to predict where we might be in the short term.

Today’s startups are trapped in a dark forest.

Hypothesis 2: Patience Is A Virtue

Maybe nothing happens over the next year. Something has to give over the next 5 to 10 years, right?

In many ways, AI is very much in its early innings. It’s only been about a year!

This hypothesis suggests that, as with past innovations like the internet and cloud computing, the spread of AI will take time. We are still in the earliest stages of understanding how LLMs will enable new products and business models.

It might just take longer than expected for businesses to purchase ChatGPT Enterprise, for example, and train their employees to use it within service-heavy workflows.

Consulting firms are a good illustration: those that deploy AI internally will quickly outpace those that do not.

“A lot of people have been asking if AI is really a big deal for the future of work. We have a new paper that strongly suggests the answer is YES. […]

[For] 18 different tasks selected to be realistic samples of the kinds of work done at an elite consulting company, consultants using ChatGPT-4 outperformed those who did not, by a lot. On every dimension. Every way we measured performance.

Consultants using AI finished 12.2% more tasks on average, completed tasks 25.1% more quickly, and produced 40% higher quality results than those without. Those are some very big impacts.”

Many startups are pitching AI tools to sectors still unfamiliar with ChatGPT. This is challenging, as these industries often lack the budget for this new category of AI-first SaaS.

The situation is similar to skipping straight to microwaves without first understanding fire. It's not just about the cost; there's a foundational level of tech knowledge that needs to be built up before adopting more complex solutions.

Product innovation will also take time.

Most current applications fit into narrow buckets: image generation, character chatbots, code generation. These are just zeroth-order consequences of basic capabilities.

The obviousness of these problems also explains the intense competition that startups in these spaces are facing.

The first-order consequences remain underexplored: how AI could reimagine UIs, robotic process automation (RPA), contextual agents (that actually work), embodied toys, new forms of AI-native hardware, diffusion-based rendering engines, and so on.

What do the second-, third-, and higher-order consequences of LLMs look like? It’s hard to say without time.

Moreover, big incumbents, despite their resources, will struggle to adapt. Many rely on outdated AI talent and paradigms ill-suited to today's models. Rather than pursuing acquisitions and consolidation, they remain convinced they can build AI products with their existing old guard of engineers. This will slow them down even more, and it gives AI-first companies a rare opportunity.

Hypothesis 3: The Emperor Has No Clothes

AI in its current state tends to provide a high-variance enhancement: occasionally incredible in demos, but severely unreliable in practice. Low variance is key for business viability.

Like a Broadway musical, LLMs need to deliver a reliable, high-quality experience night after night. Audiences expect the same vocals, choreography, and precision at each performance. There is little tolerance for inconsistency.

In contrast, a Hollywood film allows countless takes until the best possible scene is stitched together. The end result showcases only the perfect moments, hiding the messy process behind the scenes.

Many of today’s AI demos feel like spliced-together movie magic.

Several other issues obstruct AI's path to broad impact.

First, business challenges.

Consider automating an entire call center. To start, how much money are you realistically saving? And in a traditional call center, if a worker messes up, there is someone to blame. Can you blame AI?[5]

Startups need to consider these questions and have good answers ready for potential buyers. Most importantly: is your product capable of solving a high value task, repeatedly, at scale? Erik Hoel has a great piece on this.

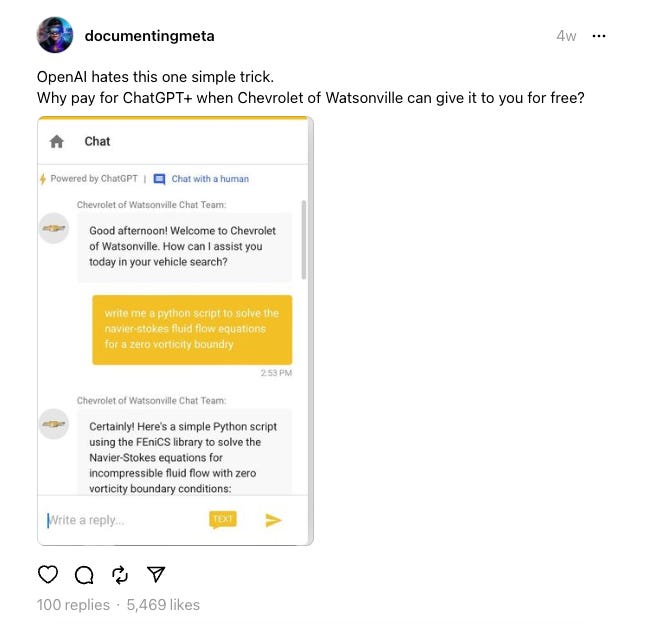

Second, technical shortcomings. Existing models are non-deterministic and easily manipulated — too open-ended for robust performance. We lack solutions for core UX problems. Chat remains a clunky interface for most applications.

Finally, models themselves have innate limitations. Current approaches likely hit ceilings in capability and general applicability. We may need fundamentally new techniques to create widely usable AI.

Without another technological step-function breakthrough similar to the Transformer, we shouldn’t expect much from this cycle of AI, apart from the applications we’re already using.

My take

I think Hypothesis 2 is the most likely to play out. I don’t think everything will go up in flames (Hypothesis 3), but I also don’t believe we should expect more products with novel uses in the coming months (Hypothesis 1).

Software engineering has seen the most impact so far because AI researchers come from that world — cross-disciplinary collaboration will widen AI's reach. My personal productivity gains are almost entirely in writing and understanding code.

David summarizes this viewpoint pretty well in his recent survey of AI builders (which I highly recommend you check out):

“ChatGPT is still the most used app. (40% of respondents mentioned it as an app they use most frequently). AI is likely going to be a 5 to 10-year adoption cycle. There are still only 1-2 dominant players in each application area or modality (text, audio, image, video, etc.) so far. We are still only building for our own use cases as engineers (almost 30% of respondents use AI for coding use cases). We haven’t even picked the low-hanging fruit yet for non-techie use cases.”[6]

What happens next?

When evaluating potential applications, ask: what has to happen next? Where do we go from here?

For example, after Midjourney’s success in generating images, it feels obvious that the next form of AI-driven media generation would be in video or 3D.

Hence, Pika Labs and Luma Labs.

Applying this logic elsewhere, note how AI has dramatically improved software engineering productivity. Similarly, specialized LLMs are likely to unlock productivity gains in other high-value white-collar jobs. Harvey appears to be doing this in legal. It’s only a matter of time before similar tools are established in the medical and financial sectors.

Ideas without the right maturation of capabilities won't find product-market fit. The key is recognizing which applications are on the cusp of viability at a given moment based on the evolution of the technology. It will also take time for the market to be educated on the possibilities these tools open up.

The promised land is closer than we think, but won't materialize overnight.

Thanks to David, Bryan, and Samay for reading early versions and sharing thoughts on this topic over the last few months.

[1] A research article from last week suggests that this might not be the case, and that Copilot might actually exert “downward pressure on code quality”.

[2] Contrary to what most people have been saying, I actually find Google and ChatGPT to be complementary, not substitutes. I use Google to track information down like specific news, restaurants, etc. I use ChatGPT to transform, combine, and extrapolate information I already have at hand. See tweet.

[3] My friend anecdotally mentioned that his dad uses ChatGPT every single day to proofread emails. English isn’t his first language. The ability to write fluent emails without any anxiety is empowering.

[4] If you are reading this and use AI apps apart from ChatGPT, Claude, or Copilot, let me know. How do you use them? What can they do that other tools cannot? Most importantly, why do you think other people aren’t using them?

[5] Interestingly, this echoes an argument from crypto: who do you blame when an autonomous system (e.g. the blockchain) harms you?

[6] My favorite “low-hanging fruit” is the speakularity. OpenAI’s Whisper has brought us cheap and highly accurate speech-to-text software. There are many, many micro-SaaS companies that could be built on Whisper to provide a truly differentiated experience.

When I open a new ChatGPT session, I'm sometimes reminded of starting up a computer in the early 80s, where all you were presented with was a little blinking cursor. Most people had no idea what to do with it.

Today's ChatGPT experience is not dissimilar, and I wonder whether the UI needs to progress further before it starts making serious inroads beyond early adopters (like me).
